This means that this script:

![image_thumb[62] image_thumb[62]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEis58BnswLypFUQVth1YCa5NVmiigZMAR3KOXx8cLo-YOKk9TxNXI2ItLcZGsWi8maFhXLvqaG25pudgla3qDZPbyikuEfeTVRlbfCDAPwHqbfRBOhv1R0_1HhBPbfqaBIPs9agX-kBmOsO/?imgmax=800)

will get the data for the first 10 NuGet packages:

![image_thumb[63] image_thumb[63]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEhhqPysESqVk4Kudt87N09MahwdsKkSsw1qCQvOisObz8u7L4gzD_4uU3bf89Zfp_fE7-PJwqOCgUUBSNKwdPPZJVH-448wRQYOie0t9uR3C2a6IWsTk7HXfGTAOUUZlX0NIuhiQqokzFdl/?imgmax=800)

i.e., this will also download the actual nupkg (the zip with all the code and data files)

![image_thumb[76] image_thumb[76]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEi1m2JW0TfAR-2zk75FJTzix32UQCC8WIrJa-ToRhZiLI-c4vj9yomHdB7okdo0D1bHIlrdvJIra910-HeKOOorvI4qkg-R5yEqqvhJUSMlaq8wGsJyhl8kx3twvFDvbbMw6TH1sk5ztBE-/?imgmax=800)

(also stored in the local computer’s NuGet cache)

![image_thumb[66] image_thumb[66]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEhYOJC9aM0-EmFtLS67IR-BU2m4r8lE_GrCvrd5QDxw-HuOp5BWvgN6tjX-axks0MtOssfaqZ7dHGkUpn7YRvr1DVVHxOZXdWFdDJVkjfIFXf8VUO4K0ebBr9SPTFqZQtPBSq9Sh-VxqJRk/?imgmax=800)

This means that with this code:

we can download the entire NuGet library :)

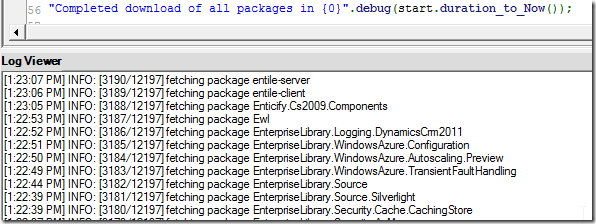

On execution, all metadata will be downloaded first:

![image_thumb[68] image_thumb[68]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEgCwPvQdfF1C99mfN9isy0WpxO552Ehr0VeQl6PMKjeP17eO8FUE3fKU05pT3FxpA6aY0A-LURRybscWf5yugs7vlr_6cS3wm_ntGiyv61O-6PDsP1AEO7HdIHEvNyUPCvoByBvk5HaqXJW/?imgmax=800)

which took 1m:32s (to get the information about 12197 packages):

![image_thumb[69] image_thumb[69]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEi7mNRkDy_UpZ4FAhn3r5_7RyHnuHiXQGszJEUk2rsmpF-F4pGJ36dvT5XibVXJzJkU3meSp6Q_2IJCS8vQ0LmEaOP88ThvjQOUtUqwjv4FtStRLhKz5IofZt7I2ix2H2D9HrPG20nvFZSh/?imgmax=800)

and then the download of all packages will start:

![image_thumb[70] image_thumb[70]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEiPvRCEiaO0BUQiYg879ZSTyI65fIf1S-ZsbL9PcA-l-TmhR9CdrgsJsB8yU8WuxnJUAbM15fSUe-H0v80ODeKSS81uzu54LRhgMmtaDYPDK6DxM36iCDEPeewq5pSsLjUGNN8JY7TmL6Bi/?imgmax=800)

and it takes a while :)

Here it is after 10m (180 MB downloaded)

![image_thumb[71] image_thumb[71]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEggD3ldOyyOJDbEyQl5xvgy8AFqJ9dE8mNuhJ03O16pVW8x6rWaMvWskB4N-Yx5zSZTxtIWneGKAX83nhYDV7SdU7LDQ4ZhTNhLogkpxg-l6iUEerKWsiYuRsWrvgqirF8sn10hk4_o_KBC/?imgmax=800)

… after 24m (505 MB downloaded)

![image_thumb[72] image_thumb[72]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEhQb_THdjrxMh1KXg0LHfwUhnTPVrzvORVKOa3WGgmN_NSbFmz0eGAFJtet1fdi7wSbhjic1tvTLWJ2ovxuNuJXWvotUcGHZxIDQFUMPF8lbHDv-AZlIoC20NcvOsq6uI_tQ7iLcaUhXAbg/?imgmax=800)

… after 45m (697 MB downloaded)

… after 1h (1 GB downloaded)

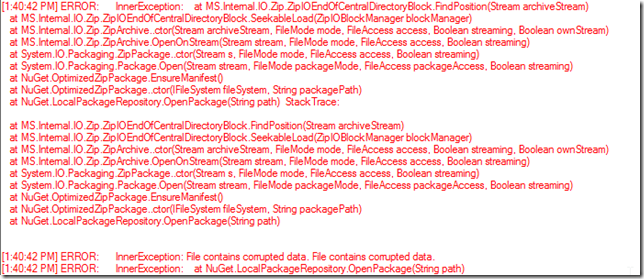

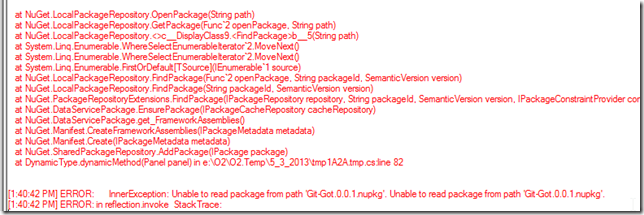

After 1h 2m and 4100 packages fetched (1.50 GB downloaded) we hit an exception:

So we need to improve our code to handle errors (and cache the packages list)
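The two improvements named above (handle errors, cache the packages list) can be sketched roughly as follows. This is a minimal Python sketch, not the C# script from the screenshots: `load_or_fetch_package_list`, `download_all`, and the `fetch_list` / `download_one` callbacks are hypothetical names, and the real NuGet calls are assumed to be supplied by the caller.

```python
import json
from pathlib import Path
from typing import Callable, Iterable


def load_or_fetch_package_list(cache_file: Path,
                               fetch_list: Callable[[], list[str]]) -> list[str]:
    """Return the package list, reading it from cache_file when it exists."""
    if cache_file.exists():
        return json.loads(cache_file.read_text(encoding="utf-8"))
    packages = fetch_list()  # the slow metadata download happens only once
    cache_file.write_text(json.dumps(packages), encoding="utf-8")
    return packages


def download_all(packages: Iterable[str],
                 download_one: Callable[[str], None]) -> dict[str, str]:
    """Try every package and record failures instead of aborting the run."""
    errors: dict[str, str] = {}
    for name in packages:
        try:
            download_one(name)
        except Exception as exc:  # broad on purpose: one bad package must not stop the run
            errors[name] = repr(exc)
    return errors
```

With something like this, a single broken package ends up in the returned error map while the loop carries on, and a restart skips the metadata fetch because the list is read back from disk.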

The code below will open a new C# script editor (with the packages passed as the _package variable)

which can then be used to trigger another fetch:

and here we go again (with the git-got.0.0.1.nupkg error being caught):

… after 15m (1.78 GB downloaded)

… after 45m (2.13 GB downloaded)

… after 50m (and 2.47 GB downloaded) I had to stop the current download thread (while changing locations)

Starting again on a new network (and physical location)

Finally (after a couple of interruptions caused by the VM going to sleep) we reached 3.99 GB

The 27 errors were caused by network problems; after re-running the scan we got 4 errors:

on these packages:

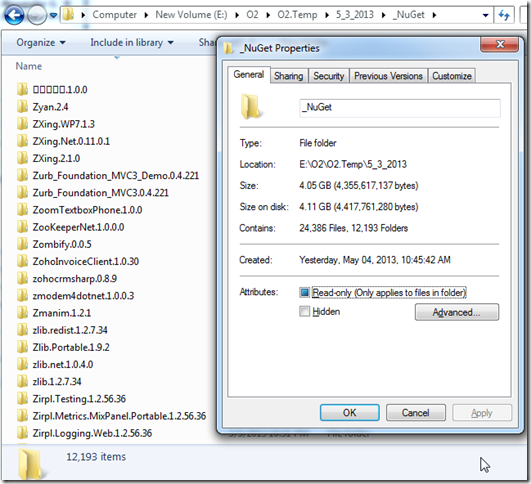
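Re-running the scan only for the packages that failed is what separates transient network errors from packages that are really broken. A rough Python sketch of that idea, assuming a hypothetical `download_one` callback that raises on failure (the original re-run was done from the C# script, not with this code):

```python
from typing import Callable


def retry_failed(failed: list[str],
                 download_one: Callable[[str], None],
                 max_passes: int = 3) -> list[str]:
    """Re-run the download for failed packages; return those that still fail."""
    remaining = list(failed)
    for _ in range(max_passes):
        if not remaining:
            break
        still_failing = []
        for name in remaining:
            try:
                download_one(name)
            except Exception:
                still_failing.append(name)  # keep it for the next pass
        remaining = still_failing
    return remaining
```

Packages that survive every pass are the ones worth inspecting by hand.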

So now we have a 4 GB cache folder

and a 4 GB NuGet archive

with each of these 12,193 folders containing a *.nupkg and a *.nuspec file
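A quick way to check that claim is to walk the archive and list any folder missing one of the two files. A small Python sketch, assuming one sub-folder per package directly under a root directory (`archive_root` and the function name are hypothetical):

```python
from pathlib import Path


def incomplete_package_folders(archive_root: Path) -> list[str]:
    """Names of sub-folders that lack a .nupkg or a .nuspec file."""
    incomplete = []
    for folder in sorted(p for p in archive_root.iterdir() if p.is_dir()):
        has_nupkg = any(folder.glob("*.nupkg"))
        has_nuspec = any(folder.glob("*.nuspec"))
        if not (has_nupkg and has_nuspec):
            incomplete.append(folder.name)
    return incomplete
```

An empty result means every package folder has both files.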

See also Offline copy of the entire NuGet.org gallery. What should I do with these 4.05 GB of amazing .NET Apps/APIs?