Note that this is not a simple task, since there are multiple cases where the links are inside <li> tags (with or without text) which will also need to be removed (there was also a problem caused by the fact that the <li> tags were not correctly normalised (as you will see below)

I used the TM Link Status tool since it provides a nice analysis of what needs to be fixed, including REPL environments to script the object created during the 'link analysis' phase'.

Here is the workflow I followed, including some of the probs I had to solve along the way:

1) Downloading and compiling the TeamMentor Links Status tool:

First step was to clone the https://github.com/TeamMentor/UnitTests repo:

... which contain the script that we want to use:

Since the VM I was using didn't have the O2 Platform installed, I quickly downloaded it, and copied the download file into this folder

After first execution there will be a couple temp folders that will exist on the root folder:

To execute the TM - View Library Links Status v1.0.h2 script, all that is needed is to drag-and-drop it into the big O2 Platform logo:

2) Running the TeamMentor Links Status tool:

Here is what the tool should look like when executed for the first time:

On the server TextBox (see above) I added a reference to the the https://tm-34-qa.azurewebsites.net server, and after clicking on the Connect link, I get a list of the libraries that exist on this server:

I then logged into the server (without it I would had not been able to see the article's contents)

... clicked on the Start Analysis link:

... first action performed by the script is to pre-load all 534 articles for analysis:

After the content is fetched:

... the link analysis starts:

... which can also take a little bit since there is a need to make a request to the server asking 'can you resolve this XYZ link':

Once the Analysis is complete, here is what the results looked like for the .NET 2.0 Library:

3) Using the TeamMentor Links Status tool:

As the above screenshot shows, the results are broken down into 3 sections:

- Other links - Internal links and links to external resources

- TM OK - Links to other TeamMentor articles that are OK (i.e. the target was found on this server)

- TM NOT OK - Links to other TeamMentor articles that could not be resolved on this server

The real interesting analysis happens on the TM OK and TM not OK treeviews where each root TreeNode represents a 'linked into' page, and its child nodes represent a 'has link into parent node' page:

So in the screenshot above in the treeview to the left we can see the links that are currently OK (i..e the HTTP Replay Attack page has a link into the parent note page) and the Credentials Dictionary Attack page (on the right) has a broken link into the Guidance: Consider using JAAS for Aut.... page:

A nice way to confirm this is to right-click on one of the links and chose the open in Browser menu item:

... which will open a new popup window with the selected article:

For example, here is an article from the TM not OK List:

... which contains a link:

... to an article that doesn't exist anymore:

4) Scripting the Collected data using a C# REPL

One of the most powerful features of this tool is that it provides a REPL environment already preconfigured with the link's analysis shown in the main UI.

To access it, open the REPL (scripting) menu and chose the REPL Collected Data option:

The popup window is a typical O2 Platform C# REPL environment already populated with a script that provides good clues to the type of data analysis available:

SOURCE CODE GIST: just about all variations of the scripts shown below are available on the gist https://gist.github.com/DinisCruz/8915366 , so take a look if you want to see the C# scripts in more details.

For example there is a dictionary with the url of the broken link as the Key:

... and a list of TM articles as the Value:

This means that the list of articles that is mapped to the url, are the articles that have a link to that URL that will need to be removed.

For example, here are 3 articles that have a link to the selected article GUID:

There is also an provided TeamMentor API that exposes a number of methods that make the manipulation of Article content, really easy. For example here is how to retrieve the HTML content of a particular article:

A nice way to double check that there are indeed links in this content to the mapped GUID, is to open a Code Editor with the contents of an article:

... and do a search for the article GUID (in the case below, available in the Output window)

... which can be easily found using the Code Editor's Search box

5) Using HtmlAgilityPack to find the links to remove

Next step is to find the links to remove, which are in the middle of the articles HTML code, so we need an HTML parser to be able to access them in a programatically way.

O2 already has good support for the HtmlAgilityPack library, which will need to be added as a reference to the current script like this:

Once the reference has been added, we can use extension methods like htmlDocument to create an HtmlAgilityPack HtmlDocument object for an Article's content:

There is also an links() extension method:

... which returns a list of HtmlNode objects:

For reference, there is how these links are calculated:

Back to the script, once we have links, its time to look at their outerHtml:

The next step was to add the logic to search for a particular link (note that the notokList dictionary Key is of the format: link:::title )

The script below does a search for the first item in notokList:

.... which is this link:

Just to confirm that all is ok, lets open a quick WebBrowser with Code Editor below:

... set the text of the Browser and Code Editor to the Html to process, and search for the link to remove:

5) Viewing link's data in a DataGridView:

In order to gain a better understanding of what needs to be done, and to have a visual representation of the links to remove, a temporary DataGridView will be used to map that data.

But before, some refactoring was done to extract the Html of the link to remove (including an extra feature to return both the Html of the found link and the html of its parent (which in most cases was a <li> tag):

Then a temp popupWindow was created, containing a DataGridView with 4 columns:

The last line of the script show above adds one Row to the DataGridView, which looks like this:

Here is a more complete script that adds one row per link to remove:

... which looks like this when executed:

... expanding the Html To Remove (Parent Node) column:

... allow us to see there is going to be a problem with the HTML fixes.

There are <li> tags that are not correctly terminated. I.e. the 2nd <li> tags starts before the first one was closed:

... with the </li> tag closures all happening at the end:

This problem can be easily seen if we look at one of these link + parent HTML in isolation:

Note how the the link to remove (first section of Output data) is inside a series of <li> tags (2nd section) which are only terminated at the end (3 section (using tidy Html))

Here is an example of an easy fix:

... since there is only only <li> .. </li> tag sequence:

5) Handing the links <LI> tag problem:

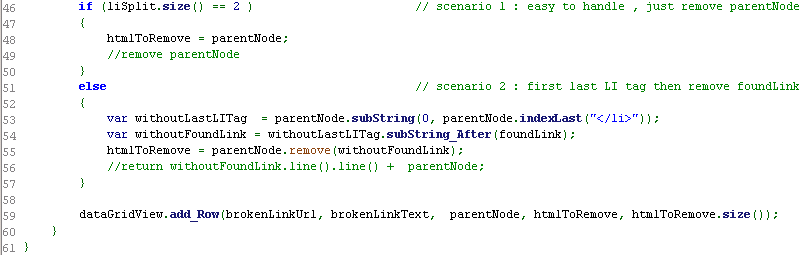

To address this issue, the script will have to handle the case when there are more than one <LI> in the outerHtml of the link element to remove.

Here is my first attempt at doing that:

Note how in the Output below, the link was correctly removed from the top and its </li> tag from the end:

To see if the formula worked on all links, the DataGridView was used again to visualise the content to remove:

... which looked like this when executed:

Before articles content are changed its good to add a bit of logging:

... do a dry run:

Here is how the script looks at the moment:

Note the extra check done at the before and after the replace happens (image above and below)

6) Changing article content

Using the provided API, it is very easy to change an article's Html.

It is just a case of calling the content() extension method with the new HTML (or WikiText/Markdown) content as the first parameter:

For reference here is the TeamMentor API code that calls the WebService method to update an Article's Content:

After execution, we get an info message confirming the change:

... and the changed article can be seen on the Output window:

Opening up the TeamMentor Tbot debug page will show that there were the following sequence of events:

- an GuidanceItem (i.e. Article) was saved

- a Git Add command was performed (in TM server s)

- a Git Commit was performed (in TM server s)

- a Git Push was triggered (from (in TM server to GitHub)

A quick look at GitHub confirms this:

7) Confirming that the changes were correctly done

Here is the older version of an TM article (note the Buffer Overflow Attack link)

Here is the updated version (note the lack of Buffer Overflow Attack link)

As another example, note the DNS Cache Poisoning link

... which doesn't exists in the 'fixed' version:

Here is a nice commit that shows another case of removing the DNS Cache Poisoning link :

Here are multiple remove examples (showing the multiple variations that needed to be handled by the 'remove script')

In the example below, note how a number of links were removed from the LDAP Injection article

... don't exist on the fixed version:

The final step is to rerun the analysis and see if the fix happened ok:

And the good news is that the new execution now show 0 Not OK links

... while keeping the previews results for Other Href (591) and TM OK (149)

Here is the original screenshot (shown in the beginning of this post) for comparison :)

That's it, the rest of the post cover contains a couple corner cases and information.

Appendix A: if the TM - View Library Links Status v1.0.h2 doesn't compile ok

...drag the script into an C# REPO editor:

.... and add a using reference to the FluentSharp.For_HtmlAgilityPack namespace (see below)

Appendix B: If the preload of articles is taking too long, it might be due to the activity Log messages.

Like these:

To remove this temporary, run the command on the TM Web C# REPL environment:

Appendix C: If when opening the articles in the Embedded Browser the following IE errors shows:

... that usually means that the TM website will need to be added to the IE trusted sites security zone

Appendix D: If the commits with changes look like this:

Then that is because there is a mismatch between the chars used for line-end on the server and at GitHub. The solution is to do a content save with no changes (i.e call tmArticle.content(tmArticle.content()) in order to trigger a save with the correct line-ending chars.

Appendix E: If the HTML contents of the client side tool need to be removed, just assign the article.Content to null and call the refreshContents extension method: